Backpropagation through non-torch operations in custom layers. … `autograd.Function`, although my main question is: what happens when I do not explicitly do it? How does PyTorch take care of parts written with …
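As for what happens without an explicit `autograd.Function`: autograd only records operations on torch tensors. A NumPy call inside `forward()` therefore either raises (calling `.numpy()` on a tensor that requires grad is an error) or, after `.detach()`, silently cuts the graph so that earlier layers receive no gradient. Below is a minimal sketch of the usual fix. It assumes the non-torch step is `np.sin`, a stand-in for whatever the custom layer really calls, and that its derivative is known in closed form.

```python
import numpy as np
import torch


class NumpySin(torch.autograd.Function):
    """Wraps a NumPy operation so autograd can backpropagate through it."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        # Autograd cannot see inside NumPy, so this computation happens off-graph.
        y_np = np.sin(x.detach().cpu().numpy())
        # Back to torch; apply() attaches this Function's backward to the result.
        return torch.from_numpy(y_np).to(x.device)

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        # d/dx sin(x) = cos(x), supplied by hand because autograd never saw np.sin.
        return grad_output * torch.cos(x)


x = torch.tensor([0.0, 1.0, 2.0], requires_grad=True)

# Without the wrapper: the result has no grad_fn, so gradients stop here.
cut = torch.from_numpy(np.sin(x.detach().numpy()))
print(cut.requires_grad)  # False

# With the wrapper: gradients flow back to x.
y = NumpySin.apply(x)
y.sum().backward()
print(torch.allclose(x.grad, torch.cos(x.detach())))  # True
```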

How to make a custom loss function (PyTorch) - Page 2 - Part 1

Guided Backpropagation with PyTorch and TensorFlow - Coders Kitchen

How to make a custom loss function (PyTorch) - Page 2 - Part 1. Of course we want to compute gradients of loss functions and use them in backpropagation. Now, as long as we use Variables all the time (…
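Since PyTorch 0.4, `Variable` has been merged into `Tensor`. "Using Variables all the time" now simply means building the loss from differentiable tensor operations on tensors that track gradients. The minimal sketch below uses a hypothetical log-cosh loss as the custom loss. It writes no `backward()` and checks autograd's result against the analytic gradient tanh(p - t) / N.

```python
import torch


def log_cosh_loss(pred: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
    """Hypothetical custom loss built only from differentiable torch ops.

    log(cosh(.)) overflows for very large errors; fine for this small example.
    """
    diff = pred - target
    return torch.log(torch.cosh(diff)).mean()


pred = torch.tensor([0.5, -1.0, 2.0], requires_grad=True)
target = torch.tensor([0.0, 0.0, 0.0])
loss = log_cosh_loss(pred, target)
loss.backward()  # autograd derives the gradient from the recorded ops

# Analytic check: d/dp mean(log cosh(p - t)) = tanh(p - t) / N
expected = torch.tanh(pred.detach() - target) / pred.numel()
print(torch.allclose(pred.grad, expected))  # True
```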

Setting custom gradient for backward - PyTorch Forums

Accelerating PyTorch with CUDA Graphs | PyTorch

Setting custom gradient for backward - PyTorch Forums. What if I have a network, like a two-layer network? Can I assign a gradient to the last layer and then apply `backward()` to perform the back…
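For a non-scalar output, `backward()` accepts the upstream gradient explicitly through its `gradient` argument, and autograd propagates it through the earlier layers. Calling `out.backward()` with no argument here would raise, because an implicit gradient can only be created for scalar outputs. The minimal sketch below assumes an arbitrary two-layer `nn.Sequential` and an all-ones gradient chosen only for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Two-layer network; sizes are arbitrary for illustration.
net = nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 2))
x = torch.randn(5, 4)
out = net(x)  # non-scalar output, shape (5, 2)

# Hand-assigned gradient dL/d(out) for the last layer's output.
custom_grad = torch.ones_like(out)

# backward() propagates custom_grad through both layers (a vector-Jacobian product).
out.backward(gradient=custom_grad)

for name, p in net.named_parameters():
    print(name, tuple(p.grad.shape))

# With an all-ones gradient, the last bias gradient is the batch size per output.
print(net[2].bias.grad)  # tensor([5., 5.])
```

An all-ones gradient is equivalent to calling `out.sum().backward()`. Any other tensor of the same shape weights the outputs differently.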

python - How does PyTorch module do the back prop - Stack Overflow

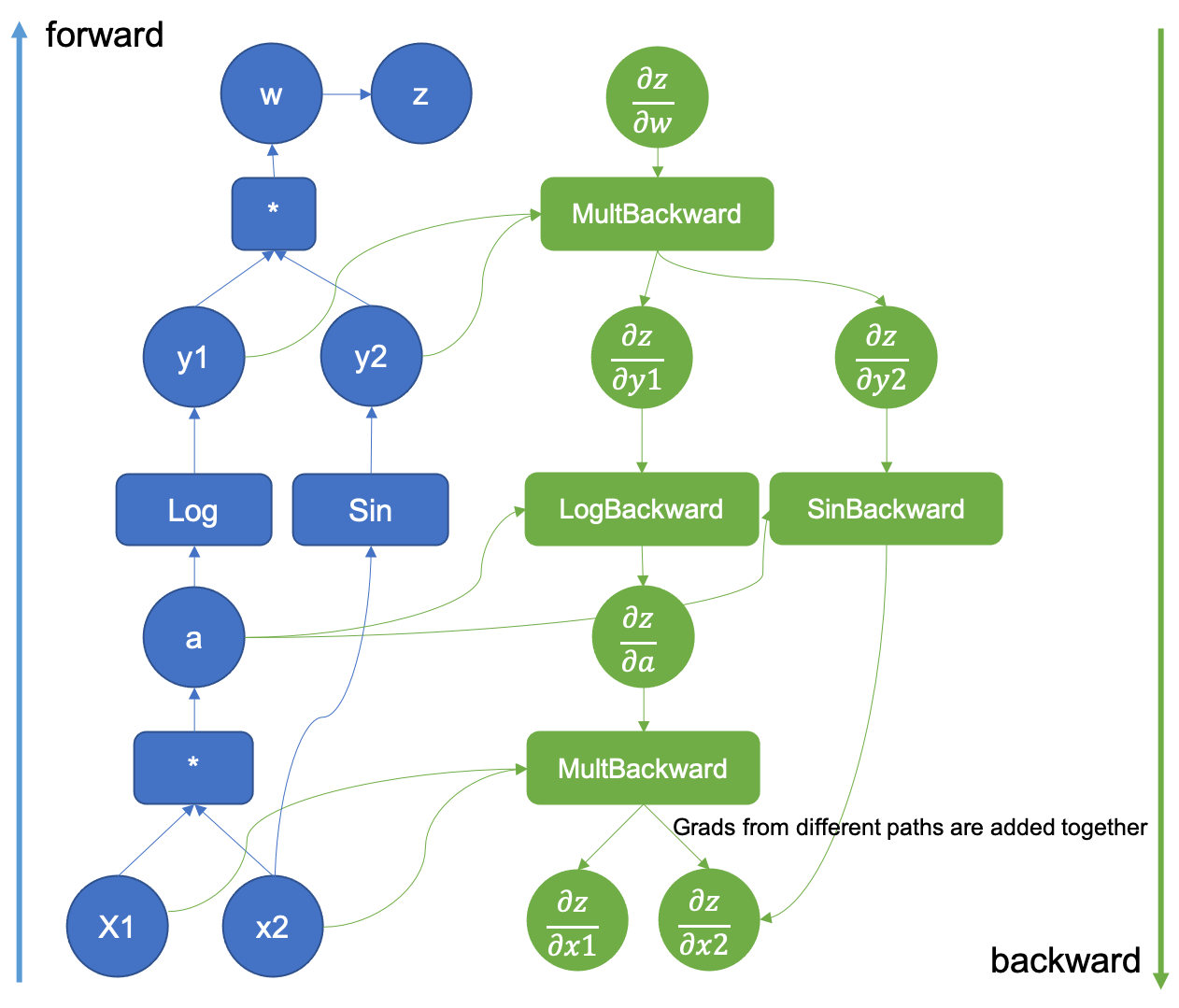

How Computational Graphs are Constructed in PyTorch | PyTorch

python - How does PyTorch module do the back prop - Stack Overflow. Not having to implement `backward()` is the reason PyTorch, or any other DL framework, is so valuable. In fact, implementing `backward()` should …
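An `nn.Module` never needs its own `backward()` because autograd records every tensor operation executed in `forward()`, and this is easy to check directly. The minimal sketch below assumes a single `nn.Linear` trained with a hand-written MSE. It compares autograd's gradients with the closed-form ones.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
layer = nn.Linear(3, 1)
x = torch.randn(8, 3)
y = torch.randn(8, 1)

# Forward pass: autograd records the linear map and the MSE ops as they run.
pred = layer(x)
loss = ((pred - y) ** 2).mean()
loss.backward()  # no user-written backward() anywhere

# Closed form: dL/dW = 2/N * (pred - y)^T x,  dL/db = 2/N * sum(pred - y)
residual = (pred - y).detach()
n = x.shape[0]
grad_w = 2.0 / n * residual.T @ x
grad_b = 2.0 / n * residual.sum(dim=0)
print(torch.allclose(layer.weight.grad, grad_w, atol=1e-6))  # True
print(torch.allclose(layer.bias.grad, grad_b, atol=1e-6))  # True
```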

Autograd in PyTorch — How to Apply it on a Customised Function

*Building Custom Image Datasets in PyTorch: Tutorial with Code*

Autograd in PyTorch — How to Apply it on a Customised Function. PyTorch achieves the autograd functionality via the computation graph. A computation graph is just a graph that enables us to backpropagate …
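The recorded graph is visible from Python. Every non-leaf tensor carries a `grad_fn` node, and that node's `next_functions` link it to the nodes that produced its inputs. The chain ends in `AccumulateGrad` nodes for leaf tensors. The small sketch below prints the graph for `d = a * b + a` and then backpropagates through it. Its `walk` helper is hypothetical and is not a PyTorch API.

```python
import torch


def walk(fn, depth=0):
    """Hypothetical helper: print autograd's backward graph, output node first."""
    if fn is None:  # inputs that do not require grad have no node
        return
    print("  " * depth + type(fn).__name__)
    for next_fn, _ in fn.next_functions:
        walk(next_fn, depth + 1)


a = torch.tensor(2.0, requires_grad=True)
b = torch.tensor(3.0, requires_grad=True)
d = a * b + a  # the forward pass records MulBackward0 and AddBackward0 nodes

walk(d.grad_fn)

# backward() traverses the same graph in reverse: dd/da = b + 1, dd/db = a.
d.backward()
print(a.grad.item(), b.grad.item())  # 4.0 2.0
```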

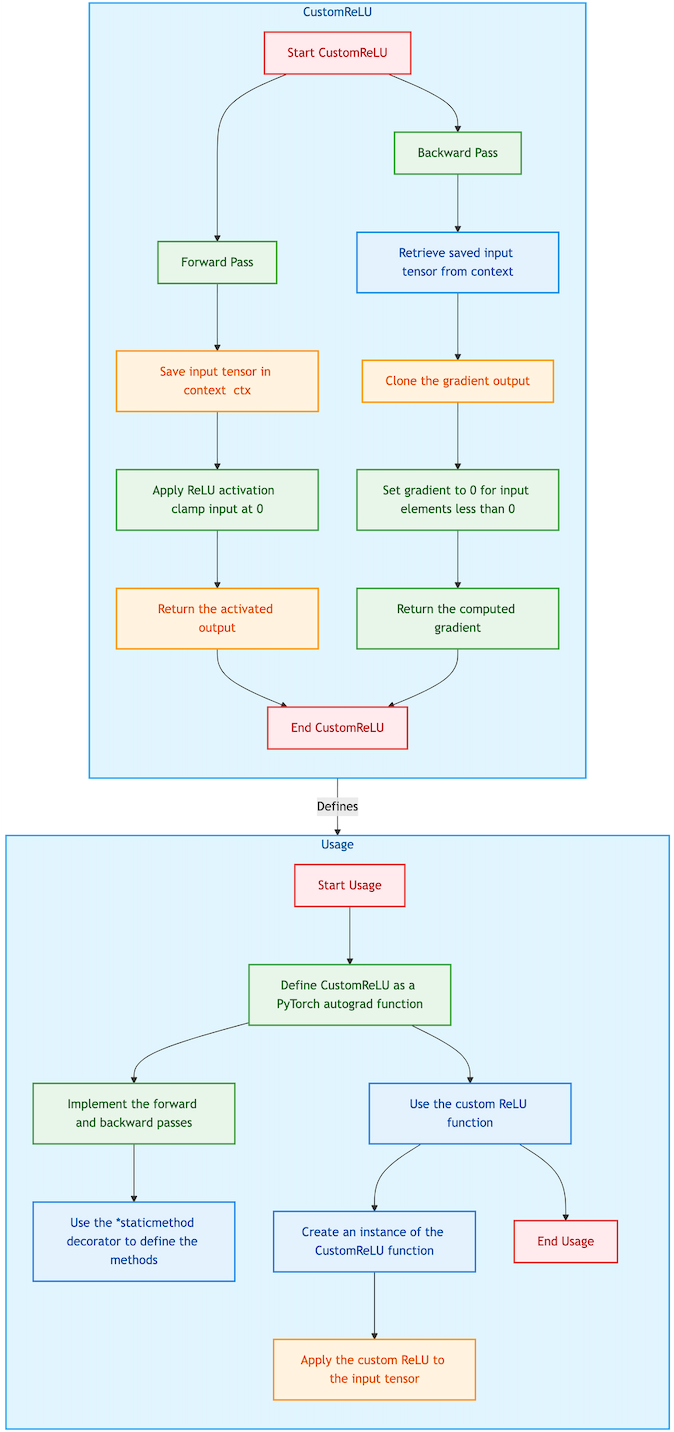

Defining New autograd Functions — PyTorch Tutorials 2.5 - PyTorch

*Custom training (without back propagation) · Issue #559*

Defining New autograd Functions — PyTorch Tutorials 2.5 - PyTorch. We can implement our own custom autograd Functions by subclassing `torch.autograd.Function` and implementing the forward and backward passes, which operate on …
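Below is a minimal sketch of that pattern. It assumes a hypothetical function y = w * x**2, which is not the tutorial's own example. `forward` stashes its inputs with `ctx.save_for_backward`, and `backward` returns one gradient per forward input. `torch.autograd.gradcheck` then compares the hand-written gradient with finite differences in double precision.

```python
import torch
from torch.autograd import Function, gradcheck


class WeightedSquare(Function):
    """Hypothetical example: y = w * x**2 with a hand-written backward."""

    @staticmethod
    def forward(ctx, x, w):
        ctx.save_for_backward(x, w)
        return w * x * x

    @staticmethod
    def backward(ctx, grad_output):
        x, w = ctx.saved_tensors
        # One gradient per forward input, in the same order as forward's arguments.
        grad_x = grad_output * 2 * w * x
        grad_w = grad_output * x * x
        return grad_x, grad_w


torch.manual_seed(0)
x = torch.randn(5, dtype=torch.double, requires_grad=True)
w = torch.randn(5, dtype=torch.double, requires_grad=True)

# gradcheck compares the analytic backward against numerical finite differences.
print(gradcheck(WeightedSquare.apply, (x, w)))  # True
```

Double precision matters here. In float32, finite-difference noise usually makes `gradcheck` fail even for a correct `backward`.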

How do I know my custom loss is working if it is part of a hybrid loss

Custom LSTM returns nan - jit - PyTorch Forums

How do I know my custom loss is working if it is part of a hybrid loss? I am using a model such that the loss is a linear combination of three losses, two from PyTorch and one customized, … get backpropagated with …
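To check that the customized term actually contributes, compute the gradient of each weighted term separately with `torch.autograd.grad(..., retain_graph=True)`. A term with no `grad_fn`, or one whose gradient is all zeros, is disconnected from the parameters. The sketch below uses assumed shapes and loss weights, plus a hypothetical custom term (a logit-magnitude penalty). It also confirms that backpropagating the combined loss yields the sum of the per-term gradients.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
model = nn.Linear(4, 3)
x = torch.randn(6, 4)
labels = torch.randint(0, 3, (6,))
target = torch.randn(6, 3)


def custom_loss(logits):
    """Hypothetical custom term: penalize large logits."""
    return logits.pow(2).mean()


logits = model(x)
losses = {
    "ce": F.cross_entropy(logits, labels),
    "mse": F.mse_loss(logits, target),
    "custom": custom_loss(logits),
}
weights = {"ce": 1.0, "mse": 0.5, "custom": 0.1}  # arbitrary example weights

# Gradient of each weighted term w.r.t. the weight matrix, computed separately.
per_term = {}
for name, loss in losses.items():
    (g,) = torch.autograd.grad(weights[name] * loss, model.weight, retain_graph=True)
    per_term[name] = g
    print(name, g.norm().item())  # a zero norm would mean the term is disconnected

# Backpropagating the combined loss accumulates the sum of the per-term gradients.
total = sum(weights[n] * losses[n] for n in losses)
total.backward()
print(torch.allclose(model.weight.grad, sum(per_term.values()), atol=1e-6))  # True
```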

Custom training (without back propagation) · Issue #559 · Lightning

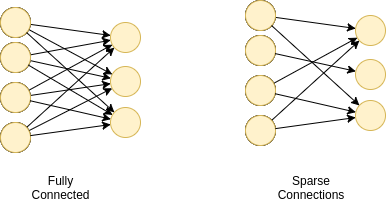

Custom connections in neural network layers - PyTorch Forums

Custom training (without back propagation) · Issue #559 · Lightning. I have a custom `nn.Module` Gaussian Mixture Model classifier based on sklearn's, which is trained by the EM algorithm instead of backpropagation.
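Nothing in `nn.Module` requires backpropagation. A model fitted by EM can keep its state in buffers and update it in closed form under `torch.no_grad()`. The issue's own classifier is not shown, so the sketch below is hypothetical: a 1-D Gaussian mixture with `k` components. It is neither sklearn's `GaussianMixture` nor any Lightning API.

```python
import math

import torch
import torch.nn as nn


class GMM1D(nn.Module):
    """Hypothetical 1-D Gaussian mixture classifier fitted by EM, not by backprop."""

    def __init__(self, k: int):
        super().__init__()
        # Buffers, not Parameters: saved in state_dict and moved by .to(),
        # but invisible to optimizers and never given gradients.
        self.register_buffer("means", torch.linspace(-1.0, 1.0, k))
        self.register_buffer("variances", torch.ones(k))
        self.register_buffer("mix", torch.full((k,), 1.0 / k))

    def log_joint(self, x: torch.Tensor) -> torch.Tensor:
        """log(mix_k * N(x_n | mean_k, var_k)), shape (N, k)."""
        diff = x.unsqueeze(1) - self.means
        log_pdf = -0.5 * (torch.log(2 * math.pi * self.variances) + diff**2 / self.variances)
        return torch.log(self.mix) + log_pdf

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Predict the component with the highest posterior probability.
        return self.log_joint(x).argmax(dim=1)

    @torch.no_grad()
    def em_step(self, x: torch.Tensor) -> None:
        # E-step: responsibilities r[n, k] = p(component k | x_n).
        resp = torch.softmax(self.log_joint(x), dim=1)
        nk = resp.sum(dim=0)
        # M-step: closed-form updates written back into the buffers.
        self.means = (resp * x.unsqueeze(1)).sum(dim=0) / nk
        diff = x.unsqueeze(1) - self.means
        self.variances = (resp * diff**2).sum(dim=0) / nk + 1e-6  # small floor keeps variances positive
        self.mix = nk / x.shape[0]


torch.manual_seed(0)
data = torch.cat([torch.randn(200) - 5.0, torch.randn(200) + 5.0])
gmm = GMM1D(k=2)
for _ in range(50):
    gmm.em_step(data)

print(gmm.means)  # close to -5 and +5
print(gmm(torch.tensor([-4.0, 4.0])))  # tensor([0, 1])
print(len(list(gmm.parameters())))  # 0: nothing for an optimizer to update
```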


Breaking Down Backpropagation in PyTorch: A Complete Guide | Medium

python - Backpropagating multiple losses in Pytorch - Stack Overflow

Hi, I'm working on a project where I need to calculate the gradient of the last layer of a network using a custom approach and then back…
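A tensor hook is one way to plug a custom last-layer gradient into ordinary backpropagation. `register_hook` on the last layer's output receives autograd's gradient and may return a replacement, which is then propagated to all earlier layers. The minimal sketch below assumes a hypothetical sign-of-error rule as the "custom approach". It also checks that the hook produces the same parameter gradients as passing the custom gradient directly to `out.backward(gradient=...)`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
body = nn.Sequential(nn.Linear(4, 8), nn.Tanh())
head = nn.Linear(8, 3)  # the "last layer"
params = list(body.parameters()) + list(head.parameters())
x = torch.randn(5, 4)
target = torch.randn(5, 3)


def custom_last_layer_grad(out, target):
    """Hypothetical custom rule: sign of the error instead of the MSE gradient."""
    return torch.sign(out - target) / out.shape[0]


# Route 1: a hook on the last layer's output replaces whatever gradient arrives.
out = head(body(x))
out.register_hook(lambda grad: custom_last_layer_grad(out.detach(), target))
loss = ((out - target) ** 2).mean()
loss.backward()  # head and body receive gradients derived from the custom rule
hook_grads = [p.grad.clone() for p in params]

# Route 2: feed the custom gradient straight into backward().
for p in params:
    p.grad = None
out2 = head(body(x))
out2.backward(gradient=custom_last_layer_grad(out2.detach(), target))

print(all(torch.allclose(g, p.grad) for g, p in zip(hook_grads, params)))  # True
```

The hook route fits when the usual loss and `backward()` call should stay untouched. The direct route avoids building a loss for the last layer at all.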